Data is everywhere, and with the rapid development and innovation of technology, it becomes more important each day. Because data now affects business models and profitability, many businesses rely heavily on data and analytical reports when making decisions about the future of the company.

When we talk about ‘Big Data’, we cannot ignore how fast things are changing in the big data world. Applications that can handle a few terabytes today may have to process petabytes of data next year.

Volume, Variety, and Velocity are the three Vs that define the properties and dimensions of big data. Volume refers to the enormous amount of data; variety refers to the many sources and types of data; and velocity refers to the pace at which data is processed. The growth of all three characteristics, not Volume alone, is believed to be what makes data processing a big challenge today. The challenge is no longer to build data sources, but to organize the ‘ocean of data’ efficiently.

Real-time Data Processing: What Is It?

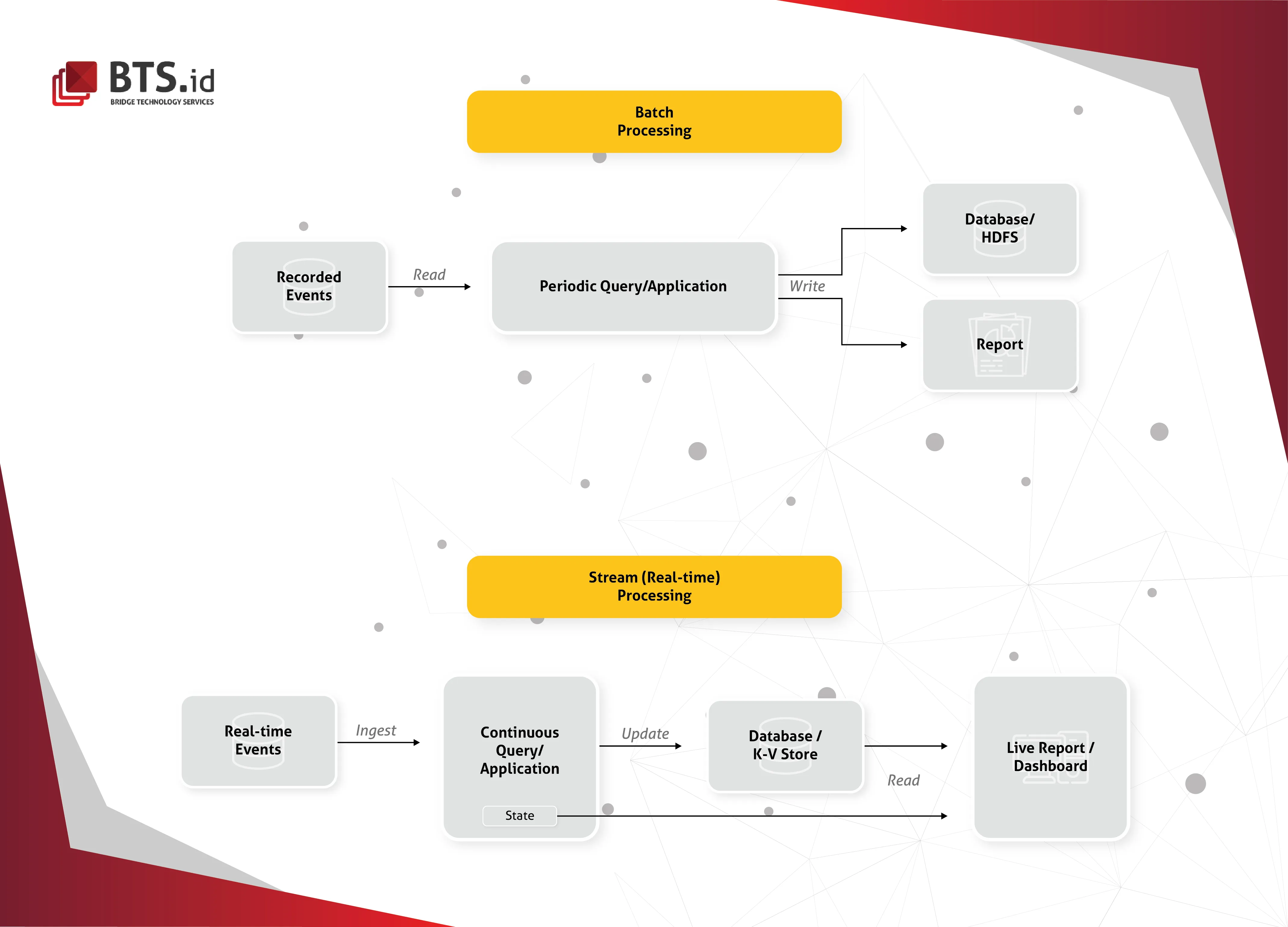

Real-time data processing, to quote Techopedia, is the execution of data in a short time period, providing near-instantaneous output. The processing is done as the data is input, so it needs a continuous stream of input data in order to provide continuous output. For that reason, Real-time Data Processing is also called Stream Processing.

While Batch Data Processing stores the data before processing it, Real-time Data Processing involves continual input, processing, and output of data. Data must be processed within a short time period, usually seconds or milliseconds (real-time or near real-time). This lets the organization take immediate action, since results are produced as soon as the input arrives and everything stays up to date.
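To make the contrast between the two styles concrete, here is a minimal sketch in Python. The function names and the sample readings are made up for illustration; the point is only that the batch function needs all the data before it can answer, while the streaming function produces an updated answer for every record that arrives.

```python
from typing import Iterable, Iterator, List


def batch_average(readings: List[float]) -> float:
    """Batch style: all data is stored first, then processed in one pass."""
    return sum(readings) / len(readings)


def streaming_average(readings: Iterable[float]) -> Iterator[float]:
    """Stream style: emit an updated result as each record arrives."""
    count = 0
    total = 0.0
    for value in readings:
        count += 1
        total += value
        # The running average is available immediately after every input.
        yield total / count


if __name__ == "__main__":
    data = [10.0, 20.0, 30.0]
    print(batch_average(data))            # one result, after all data is in
    print(list(streaming_average(data)))  # one result per incoming record
```

The streaming version keeps only a count and a running total, so it never has to hold the whole data set in memory.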

Although still in early adoption, Real-time Data Processing holds a lot of potential for the future. While most organizations use Batch Data Processing, some companies need to process their data immediately. Nowadays, many data sources (such as social networks, IoT devices, customer service systems, and financial transactions) broadcast critical information in real time. Real-time requirements usually have very tight deadlines, and missing them often has consequences such as degradation of service or, worse, complete failure.

Many technologies have been developed to answer those requirements. Regardless of which technology we eventually choose to process our data, however, it is important to adopt a good architecture first.

A good real-time data processing architecture has to be scalable. That means it must not only meet today’s demand well enough, but also adjust to cater to a much bigger one in the future. It should be possible to add new machines to the system to scale its capacity and capabilities.
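One common way to let a system grow by adding machines is to partition incoming records by key, so that each machine handles only its share. The sketch below is an illustration under our own assumptions, not a prescribed design: the worker names and the `assign_worker` and `distribute` helpers are hypothetical.

```python
import hashlib
from collections import Counter
from typing import Iterable, List


def assign_worker(key: str, workers: List[str]) -> str:
    """Pick a worker for a record key using a stable hash of the key."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(workers)
    return workers[index]


def distribute(keys: Iterable[str], workers: List[str]) -> Counter:
    """Count how many keys each worker would receive."""
    return Counter(assign_worker(key, workers) for key in keys)


if __name__ == "__main__":
    keys = [f"device-{i}" for i in range(1000)]
    # Today: three machines share the load.
    print(distribute(keys, ["worker-a", "worker-b", "worker-c"]))
    # Later: a fourth machine is added and takes over part of the load.
    print(distribute(keys, ["worker-a", "worker-b", "worker-c", "worker-d"]))
```

With simple modulo hashing like this, adding a worker moves many keys to a different machine. Schemes such as consistent hashing are commonly used to reduce that movement.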

It also has to be fault-tolerant, it needs to support both batch and incremental updates, and it must be extensible.
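How these properties are achieved depends on the technology chosen. As one possible illustration, the sketch below keeps an append-only event log and a derived view. The `EventCountView` class and its methods are hypothetical names, and replaying a log is only one of several recovery strategies. The view can be updated incrementally, rebuilt in batch from the full log, or caught up after a failure.

```python
from collections import Counter
from typing import List


class EventCountView:
    """A derived view that counts events by type."""

    def __init__(self) -> None:
        self.counts: Counter = Counter()
        self.offset = 0  # number of log entries already applied

    def apply(self, event: str) -> None:
        """Incremental update: fold one new event into the existing state."""
        self.counts[event] += 1
        self.offset += 1

    @classmethod
    def rebuild(cls, log: List[str]) -> "EventCountView":
        """Batch update: recompute the whole view from the full event log."""
        view = cls()
        for event in log:
            view.apply(event)
        return view

    def catch_up(self, log: List[str]) -> None:
        """Recovery: replay only the entries not applied before a failure."""
        for event in log[self.offset:]:
            self.apply(event)


if __name__ == "__main__":
    log = ["click", "view", "click"]
    live = EventCountView()
    live.apply(log[0])   # processed before an imagined outage
    live.catch_up(log)   # after restart, replay what was missed
    print(live.counts == EventCountView.rebuild(log).counts)
```

Because both paths go through the same `apply` method, the incremental result and the batch result agree by construction.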

Microservice Architecture for Big Data

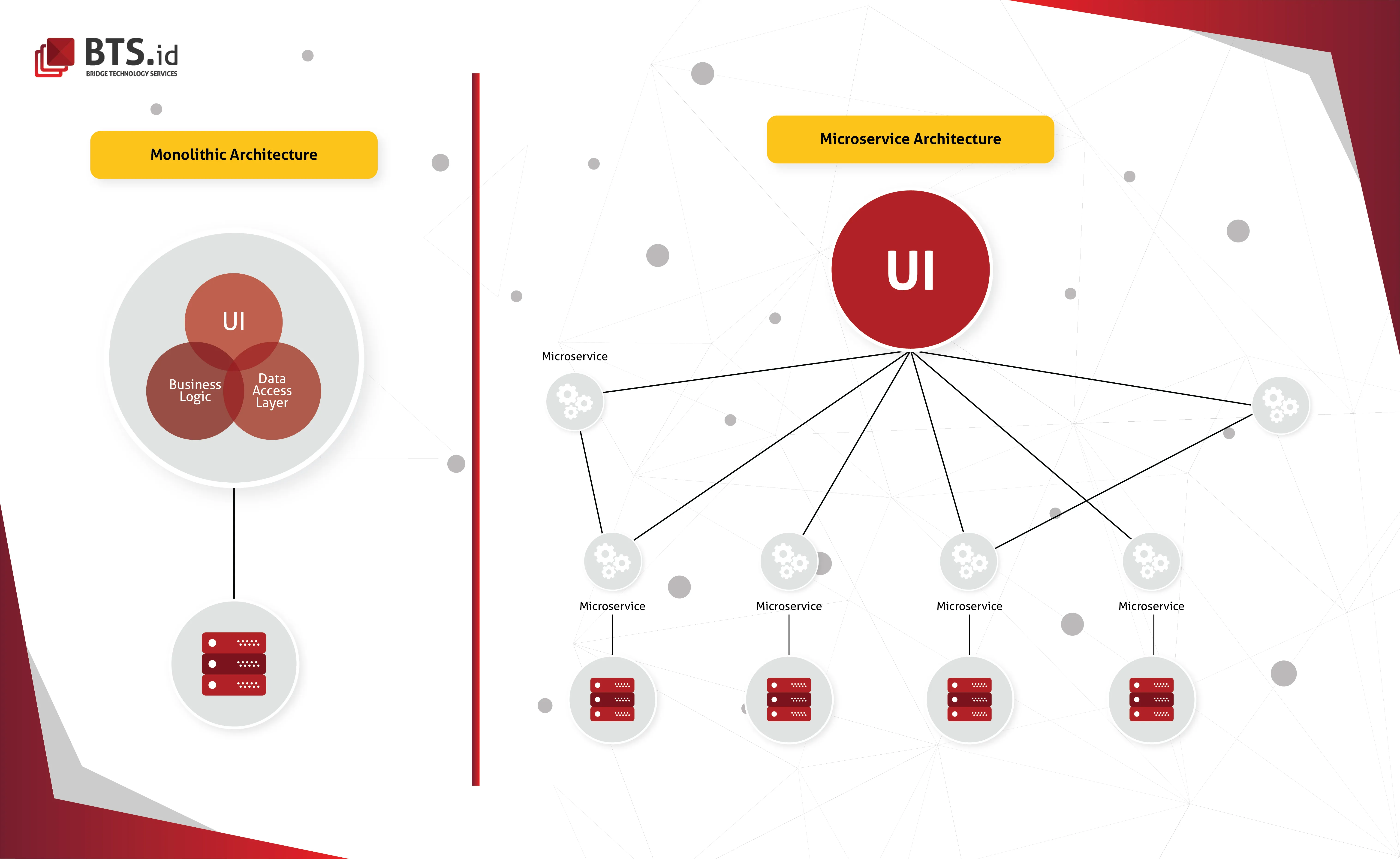

Microservice Architecture did not originally come from the Big Data world, but it is slowly being picked up by it.

While there is no formal definition of this architectural style, several common aspects exist. A microservice architecture uses lightweight, modular, and typically stateless components with well-defined interfaces and contracts.

According to Martin Fowler, the microservice architectural style is “an approach to developing a single application as a suite of small services, each running in its own process and communicating with lightweight mechanisms, often an HTTP resource API”.
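As a rough sketch of what one such small service could look like, the example below exposes a single HTTP resource using only the Python standard library. The pricing data, the `/prices/<sku>` path, and the `route` and `serve` helpers are invented for illustration. A real service would typically use a web framework and its own data store.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Dict, Tuple

# Hypothetical in-memory data owned by this one service.
PRICES: Dict[str, float] = {"sku-1": 9.99, "sku-2": 24.50}


def route(path: str) -> Tuple[int, dict]:
    """Map a request path to (status code, JSON payload)."""
    prefix = "/prices/"
    if path.startswith(prefix):
        sku = path[len(prefix):]
        if sku in PRICES:
            return 200, {"sku": sku, "price": PRICES[sku]}
    return 404, {"error": "not found"}


class PriceHandler(BaseHTTPRequestHandler):
    """HTTP front end: the only way other services reach the pricing data."""

    def do_GET(self) -> None:
        status, payload = route(self.path)
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port: int = 8000) -> None:
    """Run the service in its own process until interrupted."""
    HTTPServer(("127.0.0.1", port), PriceHandler).serve_forever()
```

Calling `serve()` and then requesting `http://127.0.0.1:8000/prices/sku-1` should return the JSON document for that item. Other services depend only on this HTTP contract, not on the code behind it.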

The idea behind this architecture is to build the application as a set of loosely coupled, collaborating services rather than one large code base, as in a monolithic architecture. A monolithic architecture requires the entire monolith to be rebuilt and redeployed when a change is made to even a small part of the application. That frustration is what drove the development of microservice architecture.

Microservice architecture significantly affects the relationship between the application and the database. Instead of sharing a single database schema with other services, each service possesses its own database schema.
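The sketch below illustrates the idea with two hypothetical services, each holding its own private SQLite database. The service names, table layouts, and methods are assumptions made for this example. The direct method call between the services stands in for what would normally be a network call.

```python
import sqlite3
from typing import Optional


class CustomerService:
    """Owns the customers schema; nobody else touches this database."""

    def __init__(self) -> None:
        self._db = sqlite3.connect(":memory:")
        self._db.execute(
            "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)"
        )

    def add_customer(self, name: str) -> int:
        cur = self._db.execute(
            "INSERT INTO customers (name) VALUES (?)", (name,)
        )
        self._db.commit()
        return cur.lastrowid

    def get_name(self, customer_id: int) -> Optional[str]:
        row = self._db.execute(
            "SELECT name FROM customers WHERE id = ?", (customer_id,)
        ).fetchone()
        return row[0] if row else None


class OrderService:
    """Owns the orders schema and stores only a customer id, not the name."""

    def __init__(self, customers: CustomerService) -> None:
        self._db = sqlite3.connect(":memory:")
        self._db.execute(
            "CREATE TABLE orders "
            "(id INTEGER PRIMARY KEY, customer_id INTEGER, item TEXT)"
        )
        self._customers = customers

    def place_order(self, customer_id: int, item: str) -> int:
        # Ask the owning service instead of querying its tables directly.
        if self._customers.get_name(customer_id) is None:
            raise ValueError("unknown customer")
        cur = self._db.execute(
            "INSERT INTO orders (customer_id, item) VALUES (?, ?)",
            (customer_id, item),
        )
        self._db.commit()
        return cur.lastrowid

    def describe(self, order_id: int) -> str:
        customer_id, item = self._db.execute(
            "SELECT customer_id, item FROM orders WHERE id = ?", (order_id,)
        ).fetchone()
        return f"{self._customers.get_name(customer_id)} ordered {item}"
```

Because the order service never reads the customers table itself, the customer service is free to change its schema without breaking anyone else, as long as its interface stays the same.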

A monolithic architecture puts all of its functionality into a single process, so scaling means replicating the whole application, which has limitations. A microservice architecture can facilitate more cost-effective scaling, because only the services that need more capacity have to be replicated.

If you plan to develop a server-side enterprise application that must support a variety of clients, including desktop browsers, mobile browsers, and native mobile applications; expose an API for third parties to consume; or integrate with other applications through either web services or a message broker, this architecture might be the right deployment architecture.

Many large-scale websites, including Netflix, Amazon, and eBay, have moved from a monolithic architecture to a microservice architecture, perhaps because of its advantages: it is scalable, secure, and reliable. Each service stands on its own, so it is easier to maintain, and developers can work on each service without interfering with the others.

Read Next: Real-Time Data Processing Architecture Part. 2

Build software for your business with best practices and the latest technology with BTS.id (Bridge Technology Services).

Contact us:

Phone: (+62 22) 6614726

Email : info@bts.id
